It is nothing new that artificial intelligence can play games. Chess algorithms in particular are well known, and an AI has also been successful in StarCraft 2. OpenAI has now, for the first time, got an AI to craft a diamond pickaxe in Minecraft, as SingularityHub first reported.

The first AI-generated diamond pickaxe

The San Francisco AI company is not the first to take an AI into the world of blocks, but past attempts by other developers did not get very far. The difficulty lies in the non-linear gameplay in a procedurally generated game world with no clear goals, quite unlike a chessboard or a StarCraft map. In a blog post, the nine-member development team now describes how a neural network was trained on around 70,000 hours of Minecraft YouTube videos. Many short clips show the AI in action, such as climbing, searching for food, smelting ore, or crafting items at the workbench.

The special feature: all of this happens in survival mode, whereas earlier Minecraft AIs were often in creative mode or worked in specially adapted environments with simplified inputs. One example is the AI competition MineRL, in which none of the 660 participating teams managed to mine diamonds in 2019.

To our knowledge, there is no published work that operates in the full unmodified human action space, which includes drag-and-drop inventory management and item creation.

OpenAI

Meanwhile, OpenAI simulates classic mouse and keyboard input, just as a real player would use it. A real player would not be happy with the frame rate, however: the algorithm runs at only 20 FPS in order to get by with less computing power.

Video PreTraining keeps Nvidia Volta and Ampere busy for days

The paper on the project is freely accessible, as is the code via GitHub. In it, the researchers describe the development of their artificial intelligence in detail. They call their methodology Video PreTraining. First, for around 2,000 hours of Minecraft video, it was manually recorded what actions the player performs at any given moment, i.e. which keys they press, how they move the mouse and what they actually do in the game. With this data, an inverse dynamics model was trained to provide matching labels for the 70,000-plus hours of Minecraft videos.

Put simply, an algorithm was first created that tries to recognize which player inputs led to the sequence shown in the video clips. Individual actions were isolated, and the gameplay both before and after them was taken into account. This is much easier for the AI than working out what is happening from the past alone: the model does not first have to predict what the player in the video actually plans to do, the researchers explain.
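The idea of labeling video by looking at the frames before and after an action can be illustrated with a deliberately tiny sketch. It is not OpenAI's model: a "frame" here is just the player's position on a line, an "action" is a step of -1, 0 or +1, and the function names `idm_label` and `build_cloning_dataset` are made up for this illustration.

```python
from typing import List, Tuple


def idm_label(frames: List[int]) -> List[int]:
    """Toy inverse dynamics model (assumption: a frame is a 1-D position).

    The action at step t is inferred from frame t AND frame t+1, i.e. the
    labeler is allowed to look at what happens after the action.
    """
    return [after - before for before, after in zip(frames, frames[1:])]


def build_cloning_dataset(frames: List[int]) -> List[Tuple[int, int]]:
    """Turn an unlabeled video into (frame, pseudo-labeled action) pairs.

    A policy trained on these pairs only sees the current frame,
    not the future one the labeler used.
    """
    actions = idm_label(frames)
    # zip stops at the shorter list, so the last frame (no action) is dropped
    return list(zip(frames, actions))


# Hypothetical unlabeled "video": the player walks right, waits, walks back
unlabeled_video = [0, 1, 2, 2, 1]
dataset = build_cloning_dataset(unlabeled_video)
print(dataset)
```

In the real system both the labeler and the policy are neural networks working on pixels; the sketch only shows why seeing the next frame makes the labeling task easier than predicting the player's intent.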

In order to make use of the vast amount of unlabeled video data available on the Internet, we present a novel, but simple, semi-supervised imitation learning method: Video PreTraining (VPT). We started by collecting a small dataset from contractors where we recorded not only their video, but also the actions they took, which in our case are keystrokes and mouse movements. With this data we train an inverse dynamics model (IDM), which predicts the action being taken at each step of the video. […]

We chose to validate our method on Minecraft because, firstly, it is one of the most widely played video games in the world and therefore has a large amount of freely available video data, and secondly, it is open-ended, with a wide variety of things to do, similar to real-world applications such as using computers. […]

OpenAI

A total of 32 Nvidia A100 graphics accelerators were used to work out which inputs belong to which actions; even so, the Ampere GPUs needed around four days to view and process all the material. The subsequent automatic labeling of the 70,000 hours of Minecraft videos took even longer, around nine days, even though a full 720 Tesla V100 GPUs of the Volta generation were used.
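To put these figures side by side, they can be converted into GPU-hours. The following is a rough back-of-the-envelope sketch that takes the rounded values from the text ("around four days", "around nine days") at face value and assumes all GPUs were busy around the clock; the helper name `gpu_hours` is made up for this example.

```python
def gpu_hours(num_gpus: int, days: float) -> float:
    """GPU-hours, assuming every GPU runs 24 hours a day for the whole period."""
    return num_gpus * days * 24


# Values as reported in the article (rounded, so results are approximate)
a100_step = gpu_hours(num_gpus=32, days=4)    # working out inputs for actions
v100_step = gpu_hours(num_gpus=720, days=9)   # labeling the 70,000 hours

print(f"A100 step: {a100_step:,.0f} GPU-hours")
print(f"V100 step: {v100_step:,.0f} GPU-hours")
print(f"Ratio:     {v100_step / a100_step:.1f}x")
```

Since A100 and V100 cards differ in performance, the ratio compares occupied GPU time, not the actual amount of computation.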

Behavioral Cloning brings the first stone pickaxe

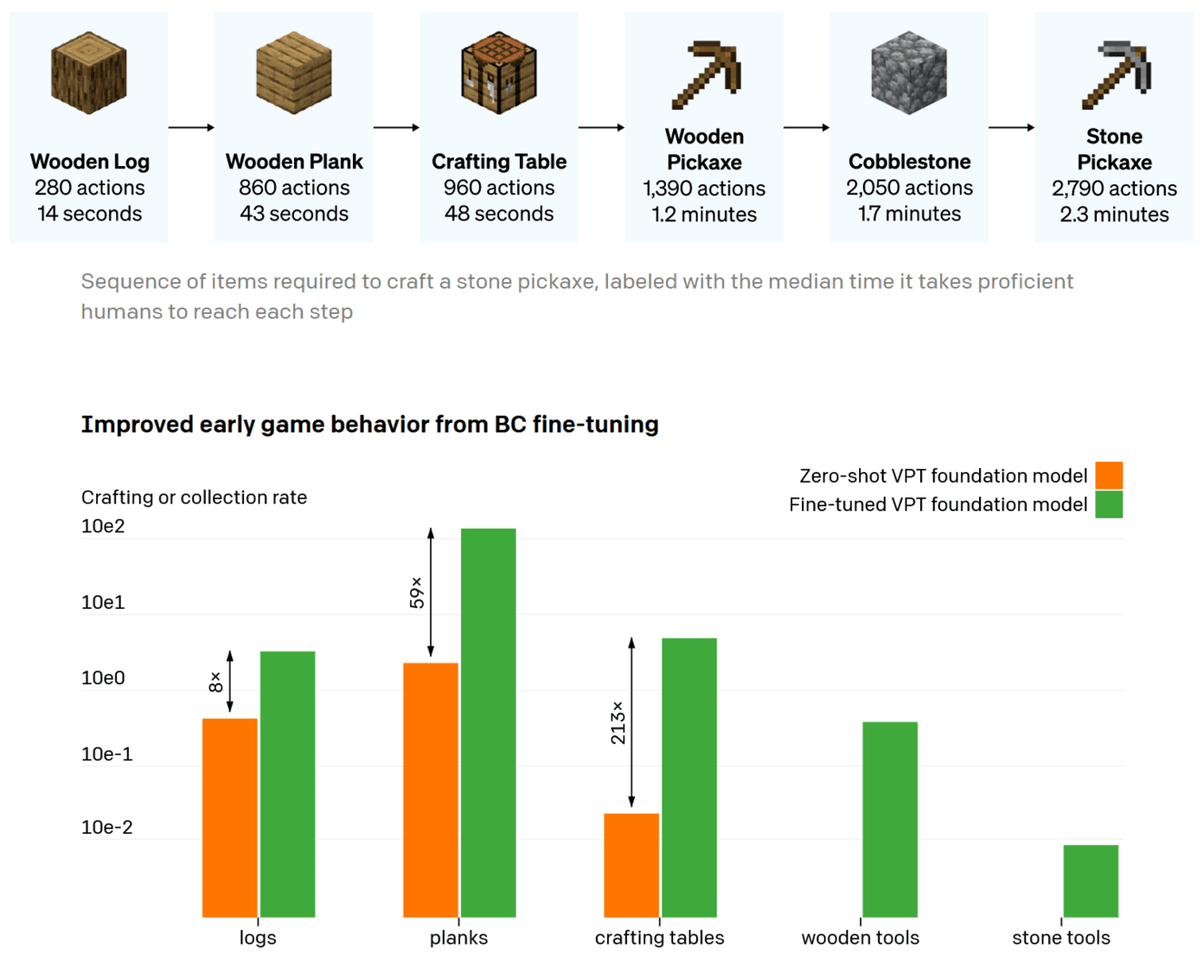

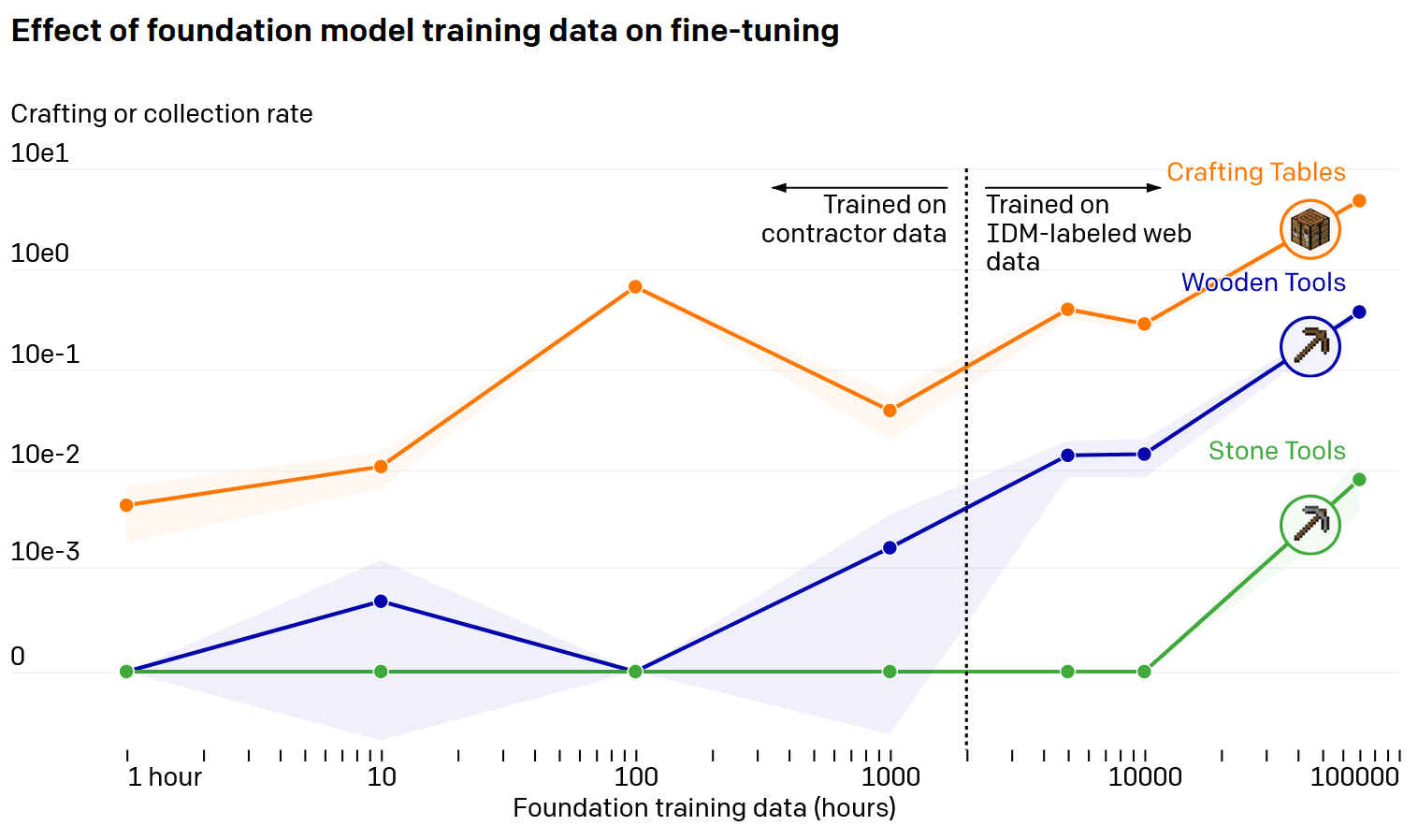

The result: the neural network manages to walk around in Minecraft, cut down trees, process wood, and even make a workbench. But that did not go far enough for the OpenAI developers; the AI was to be optimized further using behavioral cloning. This fine-tuning consisted of feeding the algorithm more specific gameplay in order to train it for a specific behavior, for example building a small house or the typical first ten minutes of a game. A100 graphics cards were used again, but this time only 16 of them. They needed about two days for the calculations.

And in fact: the researchers observed significant improvements in their AI. For example, felling the first tree only took about an eighth of the time. Wood plank processing efficiency increased by a factor of 59; when making a workbench, it was even a factor of 215. Also, the neural network now crafted wooden tools, quarried stone, and then crafted a stone pickaxe. For this purpose, the algorithm was fed around 10,000 hours of video; for wooden tools, on the other hand, 100 hours of training was enough.

Reaching the goal with reinforcement learning

As the next and last step, the OpenAI team finally opted for further fine-tuning with reinforcement learning. The training was now even more specific, and the AI was given a clearly defined goal: it was to make a diamond pickaxe. This included finding, mining, and smelting iron ore, making an iron pickaxe, and ultimately searching for diamonds. Thanks to the specific training, the neural network was able to do all this even faster than the average Minecraft player: the diamond pickaxe was ready after about 20 minutes.

One difficulty was the intermediate goals: if the AI was given the task of looking for iron with a freshly made stone pickaxe, it started digging right after crafting and left the workbench behind; it then took more time to produce the iron pickaxe. As a result, another intermediate goal was necessary: to dismantle and collect the workbench. Furthermore, the different objectives had to be weighted differently.
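How weighted intermediate goals can steer such behavior is shown in the following minimal sketch. The event names and weights are invented for illustration and are not taken from the paper; the only idea carried over from the text is that picking the workbench back up becomes its own rewarded milestone and that milestones carry different weights.

```python
from typing import Dict, Iterable, Set

# Hypothetical milestones and weights, NOT OpenAI's actual reward values
MILESTONE_WEIGHTS: Dict[str, float] = {
    "craft_stone_pickaxe": 2.0,
    "collect_workbench": 1.0,   # the extra intermediate goal
    "mine_iron_ore": 4.0,
}


def episode_reward(events: Iterable[str],
                   weights: Dict[str, float] = MILESTONE_WEIGHTS) -> float:
    """Sum the weight of each milestone the first time it occurs in an episode."""
    seen: Set[str] = set()
    total = 0.0
    for event in events:
        if event in weights and event not in seen:
            seen.add(event)
            total += weights[event]
    return total


# Agent that digs straight down and leaves the workbench behind
leaves_workbench = ["craft_stone_pickaxe", "mine_iron_ore"]
# Agent that collects the workbench before heading off
takes_workbench = ["craft_stone_pickaxe", "collect_workbench", "mine_iron_ore"]

print(episode_reward(leaves_workbench))
print(episode_reward(takes_workbench))
```

Because each milestone only counts once per episode, the agent cannot collect reward by repeating the same step, and the relative weights decide which sub-goal is worth a detour.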

Once again, processing the necessary steps of the game required a lot of computing power: 56,719 CPU cores and 80 GPUs were busy with machine learning for about six days. The algorithm went through a total of 4,000 successively optimized iterations, with a total of 16.8 billion frames read and processed.
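The scale of these numbers becomes clearer when converted into playing time. The short calculation below is a sketch that assumes the 20 FPS mentioned earlier in the article also applies to the reinforcement learning phase and that the frames are spread evenly across the iterations; neither is stated explicitly in the source.

```python
TOTAL_FRAMES = 16_800_000_000   # from the article
ITERATIONS = 4_000              # from the article
FPS = 20                        # assumption: same frame rate as stated earlier

frames_per_iteration = TOTAL_FRAMES // ITERATIONS
gameplay_hours = TOTAL_FRAMES / FPS / 3600
gameplay_years = gameplay_hours / 24 / 365

print(f"Frames per iteration: {frames_per_iteration:,}")
print(f"Equivalent gameplay:  {gameplay_hours:,.0f} hours "
      f"(about {gameplay_years:.1f} years)")
```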

Possible further applications in and outside of Minecraft

The nine researchers see great potential in the newly tested Video PreTraining methodology. The concept can easily be transferred to other situations, they say, and applications outside of video games are also conceivable. The advantage here is that the AI was designed to handle mouse and keyboard input. For the further development of the neural network, however, the team is also counting on the MineRL 2022 competition, which starts on July 1. Participants can use the OpenAI model to train artificial intelligence for other specific goals; maybe this will soon lead to the first Netherite pickaxe.

VPT paves the way to enable agents to learn how to act by watching the vast amount of video on the Internet. Compared to generative video modeling or contrastive methods that would only yield representational priors, VPT offers the exciting possibility of directly learning large-scale behavioral priors in more domains than just language. While we only experimented in Minecraft, the game is very open-ended and the native human interface (mouse and keyboard) is very generic, so we think our results bode well for other similar domains, for example, the use of computers.

We are also opening up our contractor data, the Minecraft environment, model code, and model weights, which we hope will help future VPT research. Additionally, this year we have partnered with the MineRL NeurIPS competition. Contestants can use and refine our models to try to solve many difficult tasks in Minecraft.

OpenAI
